Instagram Live Streamers Can Assign Their Own Moderators to Clean Up Chat

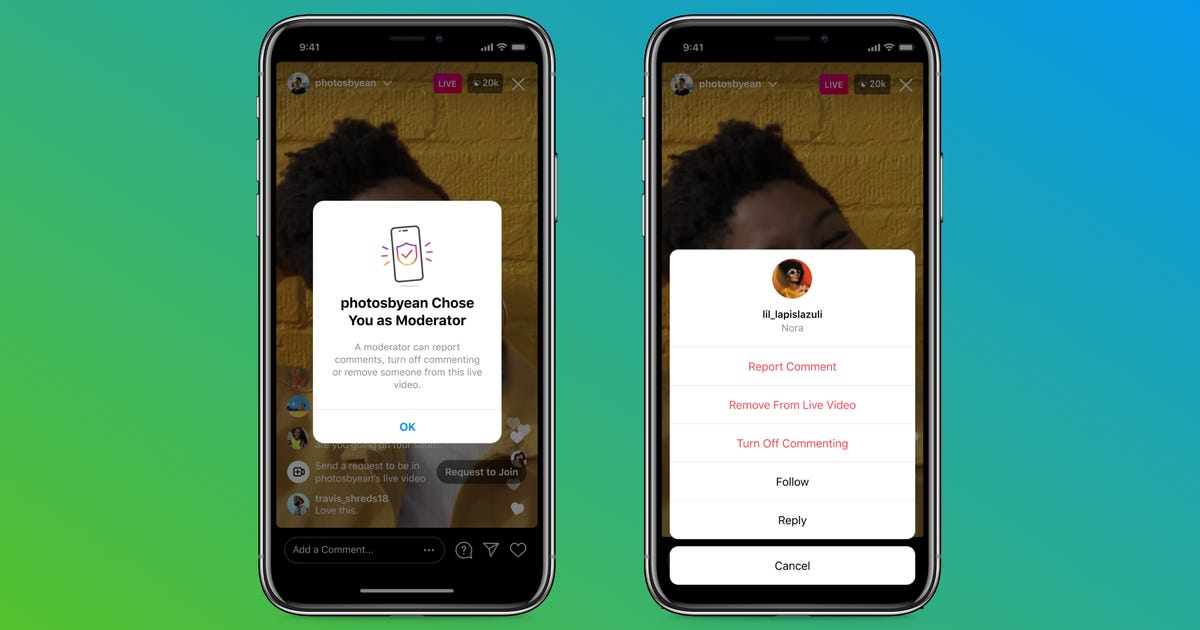

Instagram Live streamers can now assign someone to moderate their stream as it happens, freeing up broadcasters to focus on their content.

Instead of having to do all the moderating themselves, streamers can deputize someone to clean up chat by reporting comments, turning off comments for some viewers, or even booting them from the stream entirely. To assign a moderator after starting an Instagram Live, streamers tap the "..." button in the comment bar and either choose from a list of suggested accounts or manually search for one.

Rumors of assigned moderators first appeared in November when leaker Alessandro Paluzzi tweeted screenshots showing the feature in action. Instagram says it's adding the capability to help streamers keep their broadcasts safe and civil for themselves and their viewers.

Instagram parent company Meta has a lengthy history of moderating its social networks and struggled to police extreme content on Facebook during the pandemic. Even so, what it does allow has been challenged, as studies have linked youth use of Instagram, along with rival social media platform TikTok, to body image issues and eating disorders.

The company gradually added more tools for group administrators to moderate comments themselves back in June, though reports later in the year said self-harm content was still easy to find and that bullying and harassment remained prevalent on Meta's social media platforms. Still, adding livestream moderators -- especially trusted accounts who can clean up chat and remove viewers as aggressively as needed -- gives content creators tools to keep their own spaces safer than before.
